
In today's fast-evolving tech world, the demand for scalable, transparent, and ethical AI systems has never been higher. With open source gaining traction as the preferred foundation for AI innovation, major industry players are stepping up to shape its future. IBM and Red Hat, long-standing champions of open collaboration, are now pushing the boundaries of artificial intelligence with their latest initiative: RHEL AI.

This article explores how IBM and Red Hat are leading the charge in open source AI, what RHEL AI means for developers and enterprises, and why this move could redefine enterprise-grade AI solutions.

Red Hat Enterprise Linux AI (RHEL AI) is an open source AI development platform from Red Hat, an IBM subsidiary, developed in collaboration with IBM. Built on Red Hat Enterprise Linux, RHEL AI combines an optimized Linux operating system with InstructLab, an open source toolkit for fine-tuning and aligning language models that IBM and Red Hat co-developed. The platform lets organizations build, fine-tune, and deploy large language models (LLMs) using open source tools and a community-driven development model.

Unlike proprietary AI platforms, RHEL AI emphasizes transparency, flexibility, and portability. Developers can train domain-specific models without handing their data to third-party vendors, a combination of control and openness that suits businesses prioritizing data security and AI ethics.

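IBM and Red Hat's collaboration offers advantages to developers, data scientists, and enterprises, beginning with the platform's design.

RHEL AI includes two major components: an optimized Red Hat Enterprise Linux operating system and the InstructLab model-tuning toolkit.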

With this architecture, businesses can download pre-trained models, refine them with company-specific data, and deploy them across on-premises, cloud, or hybrid infrastructure. The entire process remains transparent, auditable, and fully controlled by the organization.

IBM and Red Hat's entrance into open source AI is more than another product launch. It reflects a shift in how AI is developed, governed, and deployed at scale. Here's how their tools are changing the game:

By making advanced AI tools accessible and customizable, IBM and Red Hat empower smaller organizations and individual developers to innovate. You no longer need massive resources to build powerful language models tailored to your needs.

Traditional fine-tuning often requires large datasets and substantial computing power. InstructLab reduces those requirements by starting from a small set of human-written examples, generating synthetic training data from them, and then applying targeted instruction tuning, which makes customization easier and more cost-effective.
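As a rough illustration of why synthetic data lowers the data requirement, here is a minimal Python sketch that grows a few seed prompt-target examples into a larger training set. It is a simplified stand-in, not InstructLab's pipeline: InstructLab relies on a teacher language model to generate its synthetic data, whereas this sketch uses fixed string templates. The `Example` class, `TEMPLATES` list, `expand_seeds` function, and the sample data are all hypothetical.

```python
import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    """One instruction-tuning example: a prompt and the desired answer."""
    prompt: str
    target: str


# Hypothetical rephrasing templates. InstructLab uses a teacher model for
# this step; fixed templates are a simplified stand-in.
TEMPLATES = [
    "{q}",
    "Please answer briefly: {q}",
    "A customer asks: {q}",
]


def expand_seeds(seeds: list[Example], templates: list[str] = TEMPLATES) -> list[Example]:
    """Expand seed examples into a larger synthetic training set.

    Every seed prompt is rewritten with every template while its target is
    kept, so the result has len(seeds) * len(templates) examples.
    """
    return [
        Example(prompt=template.format(q=seed.prompt), target=seed.target)
        for seed, template in itertools.product(seeds, templates)
    ]


# Hypothetical company-specific seed data.
seeds = [
    Example("What is our refund window?", "30 days from delivery."),
    Example("Which plan includes single sign-on?", "The Enterprise plan."),
]
synthetic = expand_seeds(seeds)
print(len(synthetic))  # 6
print(synthetic[1].prompt)  # Please answer briefly: What is our refund window?
```

The point of the design is the ratio: a small, human-curated seed set is multiplied into many training examples. A production generator would typically also filter low-quality outputs before any tuning run.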

InstructLab's contribution model invites developers and researchers to submit prompt-target pairs and glossary entries, enabling a shared effort to train better, safer models. This open collaboration fosters rapid improvements and accountability.
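One way to picture this contribution model is as structured records that maintainers check before they feed into training. The following Python sketch is hypothetical throughout: `Contribution`, `validate`, the field names, and the three-pair minimum are illustrative assumptions, not InstructLab's actual contribution format or review rules.

```python
from dataclasses import dataclass, field


@dataclass
class Contribution:
    """Hypothetical community contribution; not InstructLab's real schema."""
    author: str
    pairs: list[tuple[str, str]]  # (prompt, target) examples
    glossary: dict[str, str] = field(default_factory=dict)  # term -> definition


def validate(c: Contribution, min_pairs: int = 3) -> list[str]:
    """Return every problem found; an empty list means the contribution passes."""
    problems: list[str] = []
    if len(c.pairs) < min_pairs:
        problems.append(
            f"need at least {min_pairs} prompt-target pairs, got {len(c.pairs)}"
        )
    # Treat prompts that differ only in case or surrounding spaces as duplicates.
    normalized = [prompt.strip().lower() for prompt, _ in c.pairs]
    if len(set(normalized)) != len(normalized):
        problems.append("duplicate prompts")
    for i, (prompt, target) in enumerate(c.pairs):
        if not prompt.strip() or not target.strip():
            problems.append(f"pair {i} has an empty prompt or target")
    for term, definition in c.glossary.items():
        if not definition.strip():
            problems.append(f"glossary term {term!r} has no definition")
    return problems


submission = Contribution(
    author="alice",
    pairs=[("What does SELinux enforce?", "Mandatory access control policies.")],
    glossary={"RHEL": "Red Hat Enterprise Linux"},
)
print(validate(submission))  # ['need at least 3 prompt-target pairs, got 1']
```

Returning a list of problems, rather than raising on the first one, lets a reviewer or automated check report every issue in a submission at once.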

With Red Hat OpenShift integration, RHEL AI is designed to run on public cloud, private data centers, or a hybrid setup. This ensures businesses can choose the best environment for their security, compliance, and performance needs.

IBM has long advocated for trustworthy AI. With RHEL AI, organizations gain full visibility into how their models are trained, what data is used, and how outputs are generated—key principles in ensuring AI fairness, accountability, and explainability.

RHEL AI builds on Red Hat's established security features, including SELinux, access controls, and patch management. This lets businesses scale AI without compromising enterprise-grade security and compliance requirements.

From customer support bots and content generation to code completion and medical research, RHEL AI's flexible architecture supports a wide range of applications. Industries such as healthcare, finance, education, and legal services stand to benefit greatly from domain-specific AI.

IBM and Red Hat provide developers with modular, customizable AI toolchains that integrate with open source ecosystems. This flexibility accelerates innovation, fosters community contributions, and lowers the barrier to entry for building AI solutions.

The timing of IBM and Red Hat's initiative is crucial. As more organizations seek AI solutions that are trustworthy, explainable, and free of ethical blind spots, the need for open source alternatives is growing rapidly. RHEL AI meets this need while offering the performance, scalability, and support required in enterprise environments.

It also reflects a broader industry trend: the decentralization of AI development. Instead of relying solely on large tech firms for foundational models and APIs, businesses now have tools to train custom AI systems in-house. This shift could lead to safer, more ethical, and more effective AI deployments across industries.

RHEL AI is more than a product—it's a movement toward responsible, scalable, and accessible artificial intelligence. IBM and Red Hat are showing that open source isn't just about freedom of code—it's about empowering innovation, enhancing security, and giving organizations full control over their AI journey.

Now is the time to explore how your business can benefit from open source AI tools. Whether you're a developer, decision-maker, or tech leader, embracing platforms like RHEL AI can help you stay ahead of the curve and build smarter, safer, and more sustainable AI solutions.

Advertisement

Knime enhances its analytics suite with new AI governance tools for secure, transparent, and responsible data-driven decisions

Discover how IBM and Red Hat drive innovation in open source AI using RHEL AI tools to power smarter enterprise solutions.

Explore the various ways to access ChatGPT on your mobile, desktop, and through third-party integrations. Learn how to use this powerful tool no matter where you are or what device you’re using

Wondering how ChatGPT can help with your novel? Explore how it can assist you in character creation, plot development, dialogue writing, and organizing your notes into a cohesive story

Curious about AI prompt engineering? Here are six online courses that actually teach you how to control, shape, and improve your prompts for better AI results

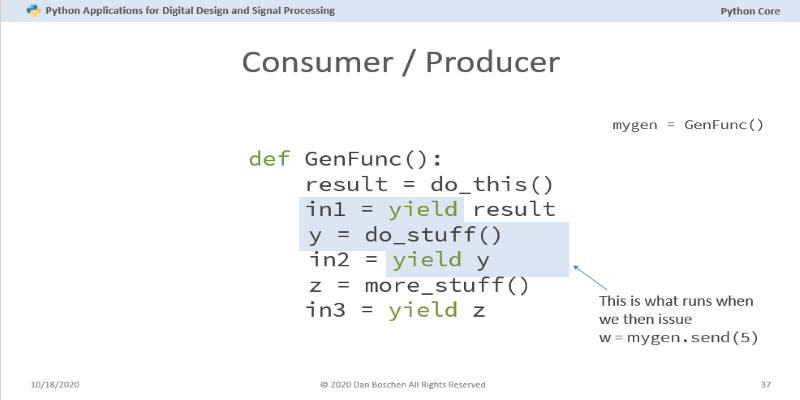

Curious about Python coroutines? Learn how they can improve your code efficiency by pausing tasks and running multiple functions concurrently without blocking

Curious about LPU vs. GPU? Learn the real differences between a Language Processing Unit and a GPU, including design, speed, power use, and how each performs in AI tasks

Think My AI is just a fun add-on? Here's why Snapchat’s chatbot quietly helps with daily planning, quick answers, creativity, and more—right inside your chat feed

Cisco’s Webex AI Assistant enhances team communication and support in both office and contact center setups.

Many organizations still lag in adopting AI due to reluctant leadership, fear of unexpected outcomes, and lack of expertise

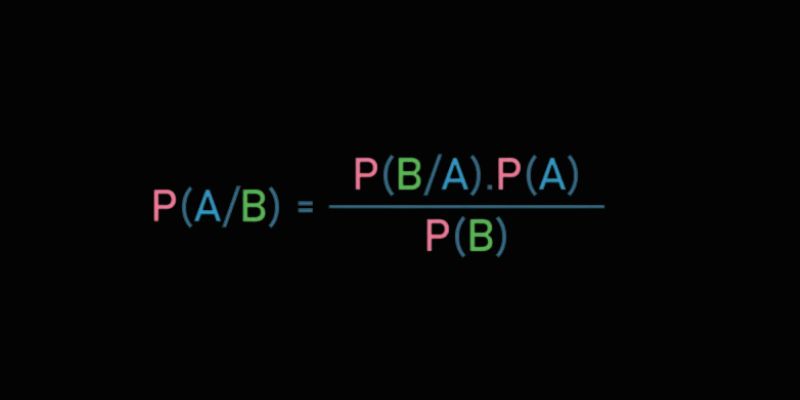

Learn Bayes' Theorem and how it powers machine learning by updating predictions with conditional probability and data insights

Case study: How AI-driven SOC tech reduced alert fatigue, false positives, and response time while improving team performance