
Bayes' Theorem is a fundamental concept in probability theory. It gives the probability of an event based on prior knowledge and new evidence. In machine learning, it is a useful tool for prediction: models update the probability of outcomes as they observe new data, which leads to more informed decisions. The theorem supports several practical uses, such as medical diagnostics and spam identification. Data-driven forecasts evolve as more information becomes available.

As data accumulates, the estimates generally become more accurate. Bayesian thinking keeps forecasts in step with the latest information by linking recent observations to historical trends. Machine learning systems learn by updating their forecasts continually, which improves their judgment over time.

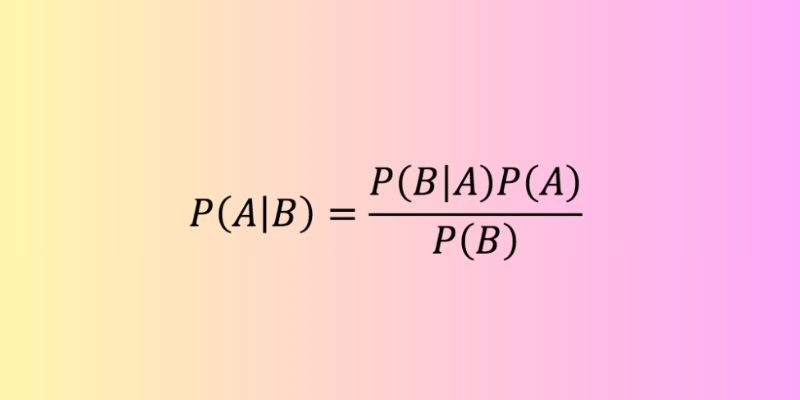

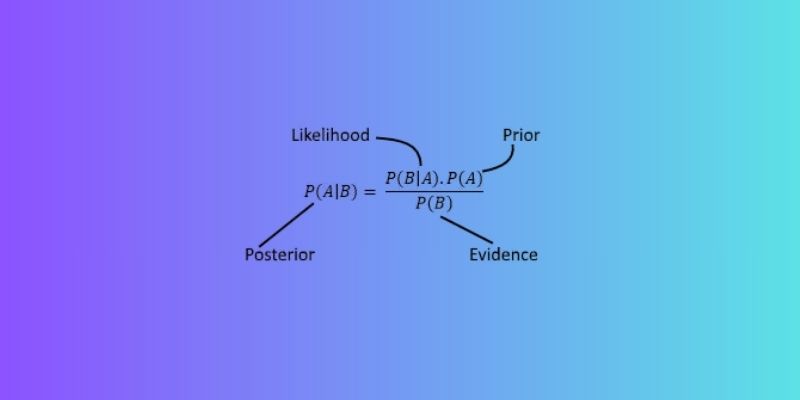

Bayes' Theorem explains how to revise a probability in light of fresh data. It is expressed as: $P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$. This formula calculates the probability of A given B by using the likelihood of B given A, the prior probability of A, and the marginal probability of B. The theorem ties together marginal and conditional probabilities, and it lets us refine our beliefs as data arrives. It is important in machine learning: it is fundamental to many classification problems and is the cornerstone of Bayesian models.
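To make the formula concrete, here is a minimal sketch of a single Bayesian update for a diagnostic test. The function name `bayes_posterior` and all the numbers (1% prevalence, 95% sensitivity, 5% false-positive rate) are illustrative assumptions, not figures from a real study. The marginal probability P(B) is expanded with the law of total probability.

```python
def bayes_posterior(prior, likelihood, false_positive_rate):
    """Return P(A|B) given P(A), P(B|A), and P(B|not A)."""
    # Marginal P(B) = P(B|A) P(A) + P(B|not A) P(not A).
    evidence = likelihood * prior + false_positive_rate * (1.0 - prior)
    return likelihood * prior / evidence


# Hypothetical numbers: 1% prevalence, 95% sensitivity, 5% false positives.
posterior = bayes_posterior(prior=0.01, likelihood=0.95, false_positive_rate=0.05)
print(f"P(disease | positive test) = {posterior:.3f}")  # prints 0.161
```

Even with a fairly accurate test, the posterior stays near 16% because the prior is so low. This is the kind of correction the theorem is meant to provide.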

These models adapt as data arrives. They do not need large datasets to get started; even a few samples can yield useful results. Systems update their projections dynamically, recalculating probabilities as new observations come in. The Bayesian method is well suited to uncertainty: it provides a rational framework for representing uncertainty and updating beliefs. The approach is versatile, and Bayesian models tend to be transparent and interpretable.

Bayes' Theorem combines two fundamental ideas: prior and posterior probability. The prior is the belief held before fresh data arrives. The posterior is the revised belief after that data is taken into account. Many machine learning models depend on this updating mechanism, adjusting their predictions with each new input. Prior beliefs can be weak or strong. Estimates typically sharpen with every observation. This adaptive character underlies many learning algorithms. Uncertainty is common in practical work, and Bayesian updating handles it gracefully.

Models stay current as the data changes. Over time, the posterior becomes more precise, reflecting better knowledge as input increases. The key is to balance prior beliefs against fresh data. Some models give more weight to new data than to prior beliefs; others rely more on initial assumptions. The right balance depends on the nature of the problem, and domain knowledge helps to define it. Bayesian techniques allow for that flexibility. In uncertain environments, they help guide better decisions.
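The balance between prior and data can be illustrated with a conjugate Beta-Binomial update, which is one common way (not the only one) to model a success rate. In this sketch the helper names, the two priors, and the data batches are all made up for illustration. Both priors are centred on 0.5, but the strong one behaves like 100 earlier observations while the weak one behaves like 2.

```python
def update_beta(alpha, beta, successes, failures):
    """Conjugate update of a Beta(alpha, beta) prior with binomial data."""
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


# Two hypothetical priors with the same mean but different strength.
weak_prior = (1.0, 1.0)      # acts like 2 pseudo-observations
strong_prior = (50.0, 50.0)  # acts like 100 pseudo-observations

# Hypothetical data arriving in batches of (successes, failures).
batches = [(8, 2), (7, 3), (9, 1)]

weak, strong = weak_prior, strong_prior
for successes, failures in batches:
    # Yesterday's posterior becomes today's prior.
    weak = update_beta(*weak, successes, failures)
    strong = update_beta(*strong, successes, failures)
    print(f"weak: {beta_mean(*weak):.3f}  strong: {beta_mean(*strong):.3f}")
```

After the three batches the weak prior has moved close to the observed success rate of 0.8, while the strong prior has shifted only part of the way from 0.5. Which behaviour is preferable depends on how much the initial assumptions deserve to be trusted.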

Naive Bayes is a machine learning algorithm built on Bayes' Theorem. It assumes that the input features are independent of one another given the class. In practice, it often works well despite this "naive" assumption. It is popular for classification tasks such as sentiment analysis and email filtering. It scales well to large datasets because training is quick and efficient. Predictions rely on conditional probabilities. Variants of the algorithm handle both continuous and categorical data.

In many applications, accuracy is strong. Though simple, the model can be surprisingly powerful and sometimes rivals more intricate models. It works best when the independence assumption holds, but it still performs reasonably well when that assumption is violated. The model computes the probability of every class and chooses the most probable one. Naive Bayes is easy to implement and to understand. Many beginners start their machine learning journey with it, and for many tasks it is a reliable baseline.
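The scoring step described above can be sketched in a few lines of plain Python. This is a simplified multinomial Naive Bayes with add-one (Laplace) smoothing, written for illustration rather than taken from any particular library. The function names and the four-document training set are invented. Log-probabilities are summed instead of multiplying raw probabilities, to avoid numerical underflow.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(documents, labels):
    """Count class frequencies and per-class word frequencies."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, label in zip(documents, labels):
        words = text.lower().split()
        word_counts[label].update(words)
        vocabulary.update(words)
    return class_counts, word_counts, vocabulary


def predict(text, class_counts, word_counts, vocabulary):
    """Return the class with the highest log-posterior score."""
    total_docs = sum(class_counts.values())
    scores = {}
    for label, count in class_counts.items():
        score = math.log(count / total_docs)  # log P(class)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocabulary:
                continue  # skip words never seen in training
            # log P(word | class) with add-one smoothing
            numerator = word_counts[label][word] + 1
            denominator = total_words + len(vocabulary)
            score += math.log(numerator / denominator)
        scores[label] = score
    return max(scores, key=scores.get)


# Tiny made-up training set, for illustration only.
docs = [
    "win money now",
    "free prize claim now",
    "meeting agenda for monday",
    "project status and agenda",
]
labels = ["spam", "spam", "ham", "ham"]

model = train_naive_bayes(docs, labels)
print(predict("claim free money", *model))        # spam
print(predict("monday project meeting", *model))  # ham
```

Each word contributes to the score independently of the others, which is exactly the "naive" assumption. It is also why training amounts to little more than counting.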

Bayesian networks extend Bayes' Theorem into richer graphical models. Nodes represent variables, and edges represent dependencies between them. The model expresses the joint distribution of all the variables, which is helpful when factors interact. In machine learning, these networks predict outcomes from input factors. Their probabilistic approach helps them handle uncertainty. Common application fields include engineering, finance, and medicine.

A diagnostic system can, for instance, model diseases and symptoms and infer the most likely ailments from reported symptoms. Networks can be built by hand or learned from data. They perform well when causal links exist. New data lets users revise their beliefs. The networks support learning as well as inference. Complex systems become easier to reason about because probabilities propagate through the network structure and guide decisions made under uncertainty. Bayesian networks handle missing data gracefully, and they can incorporate domain knowledge as well. This makes them very flexible and gives a strong approach to reasoning tasks.
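A toy version of such a diagnostic network can be written out by hand. The sketch below assumes a hypothetical three-node network (Disease pointing to Fever and to Cough) with invented probabilities, and it does inference by brute-force enumeration, which is only practical for very small networks. A symptom that was not observed is simply summed out, which is one way missing data can be handled.

```python
# Hypothetical network: Disease -> Fever, Disease -> Cough.
# All probabilities below are invented for illustration.
P_DISEASE = 0.02
P_FEVER_GIVEN = {True: 0.80, False: 0.10}  # P(fever | disease state)
P_COUGH_GIVEN = {True: 0.70, False: 0.20}  # P(cough | disease state)


def bernoulli(p_true, value):
    """Probability that a binary variable takes `value`."""
    return p_true if value else 1.0 - p_true


def joint(disease, fever, cough):
    """Joint probability, factorised along the network's edges."""
    return (
        bernoulli(P_DISEASE, disease)
        * bernoulli(P_FEVER_GIVEN[disease], fever)
        * bernoulli(P_COUGH_GIVEN[disease], cough)
    )


def posterior_disease(fever=None, cough=None):
    """P(disease | evidence); a symptom left as None is summed out."""
    fever_values = [True, False] if fever is None else [fever]
    cough_values = [True, False] if cough is None else [cough]
    weights = {}
    for disease in (True, False):
        weights[disease] = sum(
            joint(disease, f, c) for f in fever_values for c in cough_values
        )
    return weights[True] / (weights[True] + weights[False])


print(f"no evidence:     {posterior_disease():.3f}")
print(f"fever only:      {posterior_disease(fever=True):.3f}")
print(f"fever and cough: {posterior_disease(fever=True, cough=True):.3f}")
```

With these numbers the probability of disease rises from 0.020 with no evidence to about 0.140 with a fever and about 0.364 with both symptoms. Real networks are usually queried with dedicated inference algorithms rather than enumeration.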

Bayes' Theorem is used in many practical machine learning applications. Spam detection is one: keywords help classify emails as spam or not spam. Medical diagnosis is another: test results allow doctors to update the probability of an illness. Fraud detection is also common. Bayesian models help banks examine transaction patterns and learn over time which behavior is suspicious. Similar techniques are used in weather prediction, where models combine historical and current data to forecast rain.

Bayesian methods can guide content filtering in recommendation systems, where suggestions for a movie or a product depend on user behavior and feedback. Search engines can use Bayesian ranking so that the most relevant results appear first. Bayesian ideas also appear in language models, which predict upcoming words from the surrounding context. Self-driving cars use Bayesian reasoning to assess obstacles and choose a course of action. In the financial sector, Bayesian models are used to forecast stock movements, with probabilities shifting as new market data arrives. Bayes' Theorem is one of the most flexible tools available.

Machine learning relies heavily on Bayes' Theorem. It offers a principled way to revise forecasts: using conditional probability, models adjust as new evidence arrives and can stay accurate over time. Uses range from artificial intelligence to business to medicine. As models evolve with data, decisions become better informed and more consistent, and systems can work even with little input. For data science, Bayes' Theorem provides consistency and structure. Real-world Bayesian applications show the adaptability and wide applicability of the technique, and Bayesian thinking attaches a measure of confidence to results and forecasts.
