When you’re feeling unwell or trying to figure out a strange symptom, it’s tempting to type your concerns into a chatbot and get an instant answer. It feels quick, easy, and private. But there’s a gap between convenience and reliability, especially when it comes to your health. While ChatGPT can sound confident and polished, there are several reasons why using it for medical advice can be misleading, even risky.

ChatGPT has no access to your individual health records, habits, or genetic history. It can't take your pulse, check your temperature, or review your test results. It makes educated guesses based on patterns and general knowledge, not on your body, your situation, or your past. So even if the response sounds correct, it might not be relevant to you at all. An actual diagnosis is more than matching symptoms. It's about context, observation, and knowing the whole picture. That's something no chatbot can accomplish.

One of the most troubling aspects of ChatGPT is how believable it is. Even when it's incorrect, it presents the information in a manner that seems absolute. There are no pauses, no warning signals, no change in tone. It simply provides you with an answer as if it were a fact. This produces a false sense of security. You may think, "Well, it seems correct," and act on it, when in fact the advice may be outdated, applied in the wrong context, or entirely off target.

Medical knowledge changes constantly. New treatments come up. Guidelines shift. Risks get re-evaluated. ChatGPT’s training isn’t live — it’s based on snapshots of available data up to a certain point in time. That means even if it gives information that was once considered correct, that info might be outdated. In medicine, timing matters. A piece of advice that was fine a year ago might not be the best approach now. If you’re making health decisions, you need up-to-date knowledge, not past summaries.

When doctors assess symptoms, they aren’t just looking for the most common match. They’re trained to spot red flags — those subtle signs that suggest something more serious might be going on. ChatGPT doesn’t have that kind of judgment. If your symptoms don’t fit a typical pattern, or if there’s a rare condition at play, there’s a strong chance it will miss it altogether. It might even tell you to wait it out, when what you really need is urgent care.

If a doctor makes a mistake, there’s a system in place. You can ask questions, follow up, get second opinions, and file complaints. But when ChatGPT gives wrong advice, there’s no path for follow-up. You can’t hold it responsible. You can’t ask it to check your lab results again. You’re left on your own with no real support. And in health matters, being on your own with a wrong answer is never a good place to be.

Medical conditions often overlap. A single symptom can point to dozens of different possibilities. ChatGPT might give you a straightforward answer, but your situation might not be straightforward at all. It’s not uncommon for people to have multiple conditions happening at once, or symptoms that don’t fit neatly into any textbook category. A trained medical professional knows how to handle that messiness. A chatbot doesn’t. It tries to simplify — and in health matters, oversimplification can lead to the wrong path.

Beyond facts and symptoms, medical care is about people. Doctors listen to how you talk about your pain, how anxious you seem, and how your symptoms have changed over time. These things aren’t captured in a text box. ChatGPT doesn’t understand tone, emotion, or the small details that matter in a clinical setting. It doesn’t care if you’re scared or confused. It doesn’t pause to ask better questions. Its answers are fixed and surface-level. And when you're not feeling your best, a surface-level response isn't enough.

Some symptoms are harmless. Others might look harmless but point to something more serious. Doctors are trained to balance these possibilities and weigh the risks. ChatGPT doesn't actually understand what risk means, at least not in a human sense. It doesn't worry about missing something. It doesn't feel urgency. It doesn't get concerned if something doesn't add up. This means its guidance might come across as calm and measured when what you actually need is a strong recommendation to see a doctor now.

ChatGPT learns from existing content. That includes both good and bad information. If certain medical topics are overrepresented or misrepresented online, the model can reflect those same patterns in its answers. This could lead to underestimating certain conditions in women, dismissing symptoms in minority populations, or repeating harmful stereotypes. Bias in medicine is already a problem, and using a tool trained on biased sources can make it worse.

Even the most experienced doctor wouldn’t make a final call based only on what you say. They’d send you for tests, imaging, or further consultations. That process matters. It’s how they confirm or rule things out. ChatGPT doesn’t do that. It gives a snapshot based on what you type in — and that’s it. It doesn’t push you for more. It doesn’t follow up in a week. There’s no progress tracking. No deeper look. Just a reply in a box. And that’s not enough when your health is on the line.

ChatGPT might be a helpful tool for general information, but it’s not a substitute for real medical care. The answers may sound polished, but they lack depth, context, and the ability to respond to nuance. When it comes to your health, guessing isn’t good enough. You need someone who knows what to look for — and what to worry about — not a chatbot generating text based on patterns. If something feels off in your body, talk to a licensed professional. They’re trained for this. ChatGPT isn’t.

Advertisement

Wondering how ChatGPT can help with your novel? Explore how it can assist you in character creation, plot development, dialogue writing, and organizing your notes into a cohesive story

Explore the various ways to access ChatGPT on your mobile, desktop, and through third-party integrations. Learn how to use this powerful tool no matter where you are or what device you’re using

Pinecone unveils a serverless vector database on Azure and GCP, delivering native infrastructure for scalable AI applications

Discover how IBM and Red Hat drive innovation in open source AI using RHEL AI tools to power smarter enterprise solutions.

Discover how Sprinklr's AI digital twin revolutionizes customer experience through real-time insights and automation.

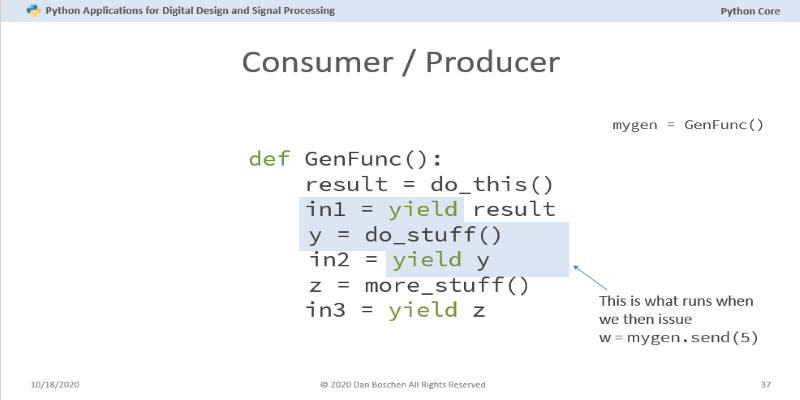

Curious about Python coroutines? Learn how they can improve your code efficiency by pausing tasks and running multiple functions concurrently without blocking

Curious about AI prompt engineering? Here are six online courses that actually teach you how to control, shape, and improve your prompts for better AI results

Explore the key differences between class and instance attributes in Python. Understand how each works, when to use them, and how they affect your Python classes

Want to create marketing videos effortlessly? Learn how Zebracat AI helps you turn your ideas into polished videos with minimal effort

Struggling with synth patches or FX chains? Learn how ChatGPT can guide your sound design process inside any DAW, from beginner to pro level

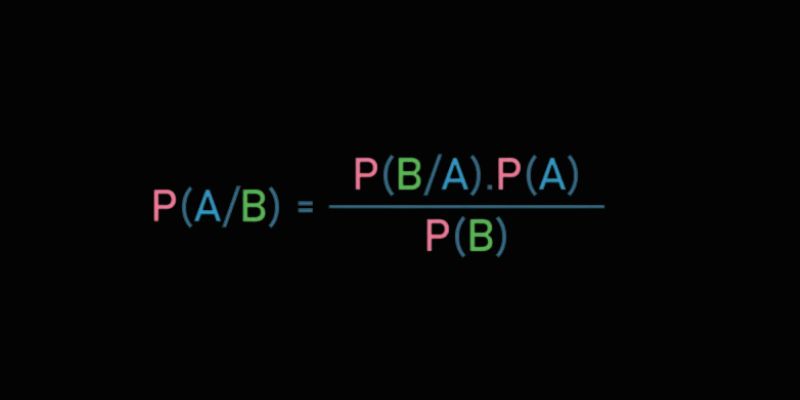

Learn Bayes' Theorem and how it powers machine learning by updating predictions with conditional probability and data insights

Many organizations still lag in adopting AI due to reluctant leadership, fear of unexpected outcomes, and lack of expertise