Nexla's integration with Nvidia NIM is a notable step forward for enterprise artificial intelligence. Companies gain more scalable AI data pipelines, which allow faster model iteration and simpler implementation of ideas. Nexla's no-code approach complements Nvidia's hardware and software stack. The integration simplifies data flow and shortens time to market for AI applications.

Fast, consistent, and scalable platforms become essential as more businesses adopt artificial intelligence. Nexla streamlines data management, improving the efficiency of AI workflows across industries, while Nvidia NIM supplies the deployment infrastructure. Together, they remove many of the obstacles that slow AI adoption. The combination of automation and smart infrastructure makes Nexla and Nvidia a strong fit for modern businesses.

Nexla provides automated tools for building and managing data pipelines. Its no-code system streamlines access to both structured and unstructured data from many sources. Because it can connect to APIs, cloud platforms, databases, and more, Nexla is well suited to enterprise-scale AI applications. It recognizes incoming data automatically and adapts to changing schemas without human intervention, which greatly reduces the need for manual fixes and debugging.
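As a rough illustration of what automatic schema detection and drift handling can look like, the sketch below infers a field-to-type mapping from sample records and reports fields that were added, removed, or changed type. It is plain Python and not Nexla's API; the function names `infer_schema` and `diff_schema` and the sample records are hypothetical.

```python
from typing import Any


def infer_schema(records: list[dict[str, Any]]) -> dict[str, str]:
    """Map each field name to the type name(s) observed across the records."""
    seen: dict[str, set[str]] = {}
    for record in records:
        for field, value in record.items():
            seen.setdefault(field, set()).add(type(value).__name__)
    # Join multiple observed types in sorted order so the result is deterministic.
    return {field: "|".join(sorted(types)) for field, types in seen.items()}


def diff_schema(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """Report fields that were added, removed, or changed type between schemas."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "retyped": sorted(f for f in old.keys() & new.keys() if old[f] != new[f]),
    }


# Hypothetical sample data: the second batch adds a field and changes a type.
yesterday = [{"order_id": 1, "amount": 19.99}]
today = [{"order_id": "A-2", "amount": 5.0, "currency": "USD"}]

drift = diff_schema(infer_schema(yesterday), infer_schema(today))
print(drift)
```

A pipeline could use a report like this to decide whether to adapt silently (a new optional field) or to flag the change for review (a retyped key).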

Real-time transformation and data validation help preserve quality and integrity in every pipeline. Nexla's built-in governance features enforce compliance rules and security policies, helping companies maintain trust in their data. Faster data availability, cleaner inputs, and reusable logic blocks simplify development for AI teams. The platform also improves collaboration among analysts, data engineers, and machine learning engineers. By simplifying complex data tasks, Nexla lets teams focus on model design and deployment, which raises operational efficiency and the pace of innovation.
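The idea of validating records before they reach a model can be sketched in a few lines. The example below is a generic illustration, not Nexla's validation engine; the rule set, field names, and the `validate` and `split_records` helpers are all hypothetical.

```python
from typing import Any, Callable

# Hypothetical rules: each field maps to a predicate that must hold.
RULES: dict[str, Callable[[Any], bool]] = {
    "order_id": lambda v: isinstance(v, str) and v != "",
    "quantity": lambda v: isinstance(v, int) and 0 < v <= 1000,
}


def validate(record: dict[str, Any]) -> list[str]:
    """Return the names of fields that are missing or fail their rule."""
    return [
        field
        for field, rule in RULES.items()
        if field not in record or not rule(record[field])
    ]


def split_records(records: list[dict[str, Any]]):
    """Separate clean records from those that should be quarantined for review."""
    clean, quarantined = [], []
    for record in records:
        errors = validate(record)
        if errors:
            quarantined.append((record, errors))
        else:
            clean.append(record)
    return clean, quarantined


records = [
    {"order_id": "o-001", "quantity": 3},
    {"order_id": "", "quantity": 5000},
    {"quantity": 7},
]
clean, quarantined = split_records(records)
print(len(clean), "clean;", len(quarantined), "quarantined")
```

Keeping rejected records alongside the reasons they failed, rather than dropping them, makes it easier to audit the pipeline later.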

Nvidia NIM provides a robust infrastructure for deploying AI models at scale. It packages models as optimized containers that are ready to run anywhere, on-premises or in the cloud. These hardware-accelerated containers use Nvidia GPUs to speed up processing. NIM takes care of performance, reliability, and deployment logistics on developers' behalf. The containers support major frameworks, including TensorFlow, PyTorch, and ONNX. Pre-built configurations and scalable execution greatly shorten setup time.

Nvidia NIM also includes APIs and SDKs for integration into enterprise environments. These tools make it straightforward to automate testing, deployment, and updates across AI systems. The platform is designed to keep models running efficiently without infrastructure bottlenecks, and it provides consistent performance and reliability for companies with heavy AI workloads. Running containerized models across hybrid and multi-cloud systems streamlines operations. With enterprise-grade tooling, NIM speeds innovation and supports modern AI needs.
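To make the API-based integration more concrete, here is a minimal sketch of how a client might prepare a request for a containerized model served over HTTP. It assumes a container listening on localhost port 8000 with an OpenAI-style chat completions route; the URL, the model name, and the `build_request` helper are assumptions for illustration and should be checked against the documentation of the specific container in use. The request is built but not sent, so the snippet runs without a live endpoint.

```python
import json
import urllib.request

# Assumptions, not taken from the article: the container listens on
# localhost:8000, exposes an OpenAI-style chat completions route, and
# serves a model registered under the placeholder name below.
NIM_URL = "http://localhost:8000/v1/chat/completions"
MODEL_NAME = "example/model-name"  # placeholder


def build_request(prompt: str, max_tokens: int = 64) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for the inference endpoint."""
    payload = {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        NIM_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_request("Summarize yesterday's sales anomalies.")
print(request.get_method(), request.full_url)
# To send it against a running container:
#     with urllib.request.urlopen(request, timeout=30) as response:
#         reply = json.load(response)
```

Because the call is an ordinary HTTP request, the same client code can target a container on a laptop, in a data center, or in a cloud cluster by changing only the URL.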

Combining Nexla's data management with Nvidia NIM's model deployment creates a streamlined AI development environment. Nexla ensures the data is clean, well structured, and fully validated before it reaches the model. Nvidia NIM manages GPU acceleration, container management, and model inference without manual effort. Because the model receives higher-quality data, the integration reduces development time and improves model accuracy. Nexla's flexible data pipelines support both batch and real-time processing.

Nvidia's containerized architecture keeps AI models running consistently and at scale. Together, the two platforms streamline processes so teams can iterate more quickly and with more confidence. Data scientists no longer need to worry about low-level integration problems, and engineers gain visibility into pipeline health, model performance, and data accuracy. Nexla and NIM also support continuous delivery, which helps companies ship changes regularly and leads to more responsive, intelligent applications. Bringing data and deployment together opens new possibilities for large-scale enterprise AI innovation.

Nexla and Nvidia NIM let retail firms use real-time consumer data to forecast demand. Nexla prepares point-of-sale and inventory data for use by AI models, and predictive models served by NIM help optimize inventory and pricing strategies. In healthcare, the integration supports scalable, secure processing of patient records and diagnostic images. NIM runs medical AI models efficiently, while Nexla ensures sensitive data is properly governed.

Financial institutions use the combination to detect fraud by feeding streaming transaction data into AI models. In manufacturing, Nexla ingests sensor data from factory floors and forwards it to models running in NIM to predict equipment failure. Logistics firms analyze route data and shipment trends to make deliveries smarter. Every sector benefits from faster insight, better efficiency, and lower operating costs. Nexla and Nvidia NIM help companies act on data-driven insights in real time, with confidence and with control over the whole AI lifecycle.

Building AI models calls for accurate data, modern infrastructure, and continuous improvement. Nexla and Nvidia NIM address these requirements. Nexla streamlines data collection, transformation, and governance from end to end. NIM offers the runtime environment in which models are tested, deployed, and monitored. Teams no longer waste time troubleshooting deployment failures or repairing broken data pipelines. Nexla adapts automatically to schema changes, and NIM is built for high availability and performance. Taken together, they encourage faster experimentation and tighter feedback loops.

Machine learning teams can iterate on models rapidly and with less overhead. Using both systems makes continuous integration and delivery easier to manage. Nexla reduces data lag by feeding clean data straight to the NIM containers. Developers get reliable, scalable environments without depending on dedicated DevOps support. The entire cycle, from data intake to model serving, is shortened. By removing complexity from AI processes, Nexla and NIM let teams focus on improving results and delivering business value.

Nexla's integration with Nvidia NIM marks a turning point for enterprise AI development. Building and maintaining scalable AI data pipelines is now easier. Teams benefit from faster deployment, cleaner data, and real-time performance. Businesses can automate model deployment and replace labor-intensive manual tasks. The combination helps AI initiatives deliver results quickly and efficiently, leaving companies free to innovate with confidence. As AI adoption grows, Nexla and Nvidia NIM are positioned to be key enablers of production-ready intelligent systems.

Advertisement

Wondering how ChatGPT can help with your novel? Explore how it can assist you in character creation, plot development, dialogue writing, and organizing your notes into a cohesive story

Learn why businesses struggle with AIs: including costs, ethics and ROI, and 10 things they can do to maximize output.

Learn the 10 best AI podcasts to follow in 2025 and stay updated with the latest trends, innovations, and expert insights.

Ever wanted to make lip sync animations easily? Discover how Gooey AI simplifies the process, letting you create animated videos in minutes with just an image and audio

Want to create marketing videos effortlessly? Learn how Zebracat AI helps you turn your ideas into polished videos with minimal effort

Knime enhances its analytics suite with new AI governance tools for secure, transparent, and responsible data-driven decisions

Nexla's Nvidia NIM integration allows scalable AI data pipelines and automated model deployment, boosting enterprise AI workflows

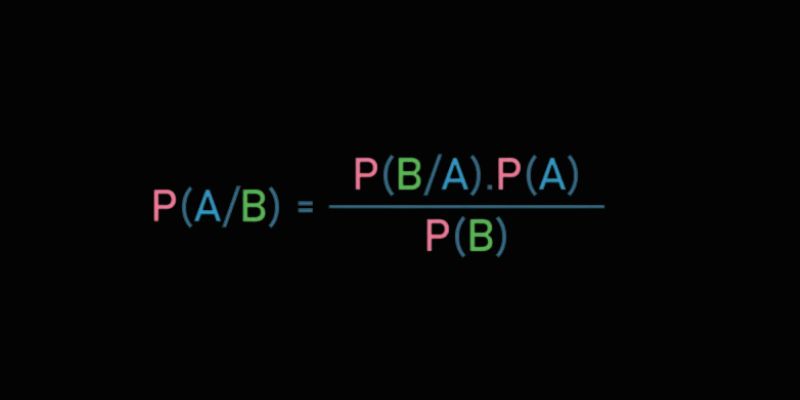

Learn Bayes' Theorem and how it powers machine learning by updating predictions with conditional probability and data insights

Struggling with synth patches or FX chains? Learn how ChatGPT can guide your sound design process inside any DAW, from beginner to pro level

Discover how IBM and Red Hat drive innovation in open source AI using RHEL AI tools to power smarter enterprise solutions.

Can AI-generated games change how we play and build games? Explore how automation is transforming development, creativity, and player expectations in gaming

Cisco’s Webex AI Assistant enhances team communication and support in both office and contact center setups.